Introduction

Recent advances in large-scale robot data have opened up possibilities for developing general-purpose policies for tabletop manipulation, while progress in universal, cross-embodiment end-to-end navigation has significantly advanced mobility. This convergence naturally motivates research into mobile manipulation (MoMA), an interdisciplinary field that integrates mobility with dexterity to enable robots to operate in large, dynamic, and human-centric environments. Compared to tabletop setups, mobile manipulation presents distinct challenges, including hardware design trade-offs (e.g., bimanual arms, torso structures, wheeled or legged bases), low-level control complexities for whole-body coordination, and scalability challenges in real-world data collection and skill learning. The field also raises fundamental questions about sim-to-real transfer, egocentric vision, and long-horizon reasoning. Furthermore, mobile manipulators introduce new dimensions to human-robot interaction, requiring safety and adaptability in extended workspaces. Addressing these challenges will unlock the full potential of mobile manipulators across unstructured environments such as homes, hospitality, logistics, gastronomy, retail, and agriculture, driving progress toward general-purpose, intelligent robots. Key topics include:

- Tasks and New Challenges: What are the critical tasks and application domains for mobile manipulation that should be prioritized? What additional challenges does mobile manipulation present compared to a tabletop setup?

- Hardware Design: What is the optimal form factor for a mobile manipulator? Should we prioritize anthropomorphic designs or appliance-like robots? Specifically, how should we decide between bimanual arms, torso structures, wheeled bases, or legged systems?

- Low-level Control: Controlling a robot with many DoFs is a significant challenge. How can we effectively integrate planning and control for executing low-level motions and whole-body coordination?

- Egocentric Perception and Scene Representation: What new challenges arise in mobile manipulation with moving cameras? How do moving cameras affect the formulation of the action space and the visual representation of 3D scenes?

- Real-world Data Scaling: Can advanced human-robot interfaces enhance teleoperation and motion capture systems for demonstration collection? How can action-free videos facilitate skill learning in the mobile manipulation domain?

- Sim-to-Real Transfer: How can simulation accelerate learning for mobile manipulation while ensuring effective real-world deployment? Can sim-to-real techniques developed for navigation be adapted to mobile manipulation?

- Long-horizon Planning and Reasoning: How can mobile manipulators efficiently plan and execute long-horizon tasks that require multiple sequential interactions and reasoning? How can large pre-trained models (e.g., LLMs, VLMs, VLAs, world models) enable new capabilities for mobile robots?

- Human-Robot Interaction: Mobile manipulators operate in an extended workspace. What new challenges and opportunities does this pose, and what safety considerations should be addressed in human-centric environments?

By addressing these questions, the workshop aims to foster discussions on advancing next-generation systems and learning for mobile manipulation.

Call for Papers

We are excited to announce the Call for Papers for the RSS MoMA workshop. We invite original contributions presenting novel ideas, research, and applications relevant to the workshop’s theme.

Important Dates

| Event | Date |
| --- | --- |
| Submission Deadline | May 25th 11:59PM UTC-0, 2025 |
| Notification | June 1st, 2025 |
| Camera-Ready | June 19th 11:59PM PT, 2025 |

Submission Guidelines

- Page Limit: There is no page-length requirement, but we suggest around 4-9 pages. There is no limit on the number of pages for references or appendices.

- Formatting: Submissions are encouraged to use the RSS 2025, IROS 2025, CoRL 2025, or NeurIPS 2025 templates.

- Anonymity: All submissions must be anonymized. Please remove any author names, affiliations, or identifying information.

- Relevant Work: We welcome references to recently published, relevant work (e.g., RSS, CoRL, ICRA, and CVPR).

- Archival Status: All accepted papers are non-archival. Submitting to multiple non-archival workshops is permitted.

- Link: OpenReview submission

Accepted papers will be presented as posters at the workshop. In addition, selected papers may be invited to deliver spotlight talks.

Paper topics

A non-exhaustive list of relevant topics:

- Benchmarks for Mobile Manipulation

- Novel Designs of Mobile Manipulator Hardware

- Low-Level, Whole-Body Control for Mobile Manipulation

- Representations for Mobile Manipulation

- Data Collection for Learning Mobile Manipulation

- Mobile Manipulation Simulations and Sim-to-Real Transfer

- Reasoning and Planning Algorithms for Mobile Manipulation

- Human-Robot Interaction in Mobile Manipulation

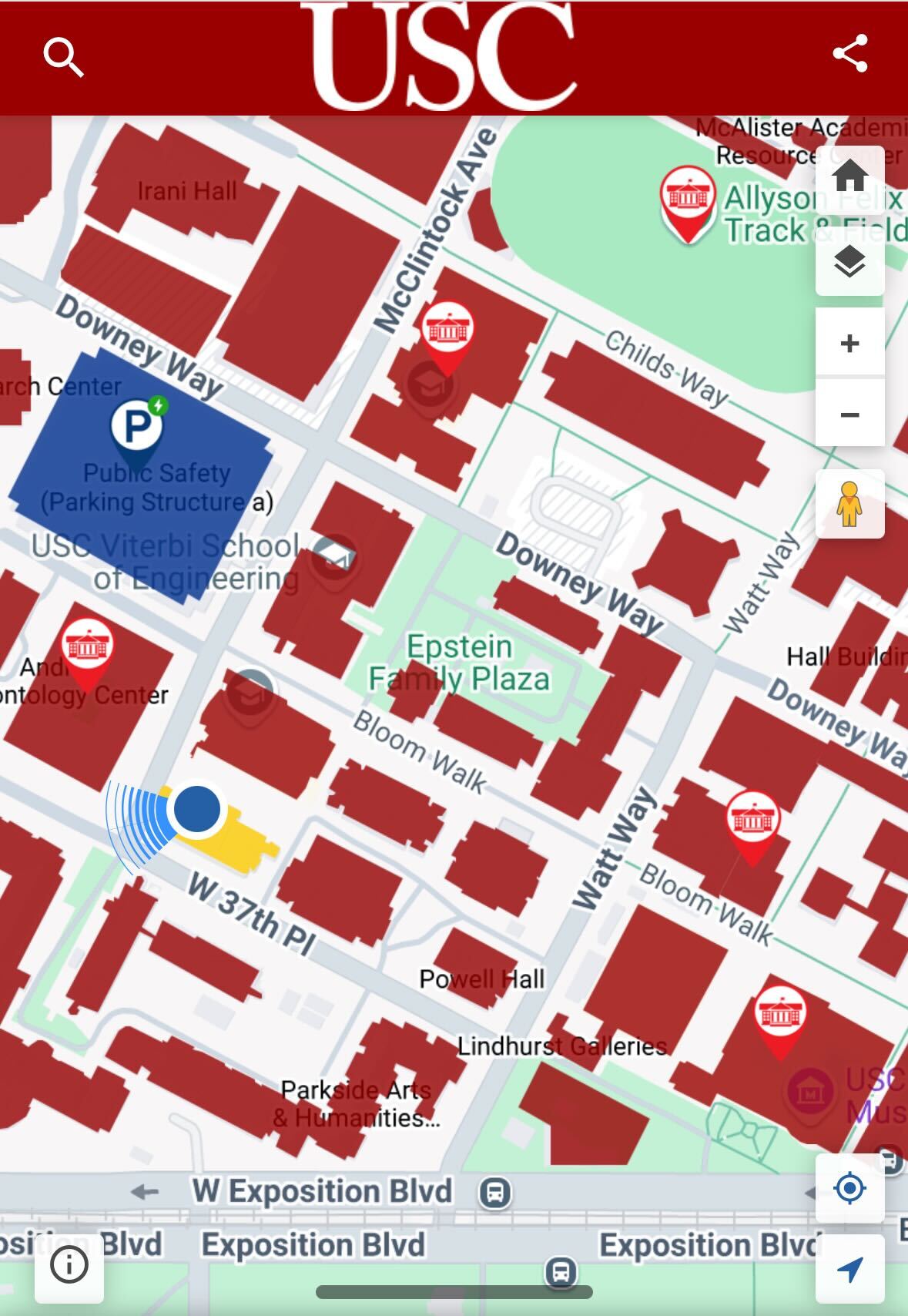

Workshop Schedule

| Start Time (PDT) | End Time (PDT) | Event |
| --- | --- | --- |
| 8:45 AM | 9:00 AM | Welcome and introduction |
| 9:00 AM | 9:25 AM | Talk: Jeannette Bohg. Talk Title (TBD) |
| 9:25 AM | 9:50 AM | Talk: Lerrel Pinto. Talk Title (TBD) |
| 9:50 AM | 10:15 AM | Talk: Richard Cheng. Analyzing the bottlenecks for mobile manipulation: Battling the heavy tail |
| 10:15 AM | 10:40 AM | Talk: Roberto Martín-Martín. Learning to Imitate Humans for Mobile Manipulation Tasks |
| 10:40 AM | 10:50 AM | Lightning talk |
| 10:50 AM | 11:30 AM | Coffee break with poster presentations |
| 11:35 AM | 12:30 PM | Panel discussion |

Invited Speakers

listed alphabetically